How to train Large Language Models? - Part 2

The Art of Tokenization: Harnessing its Potential for Large Language Models

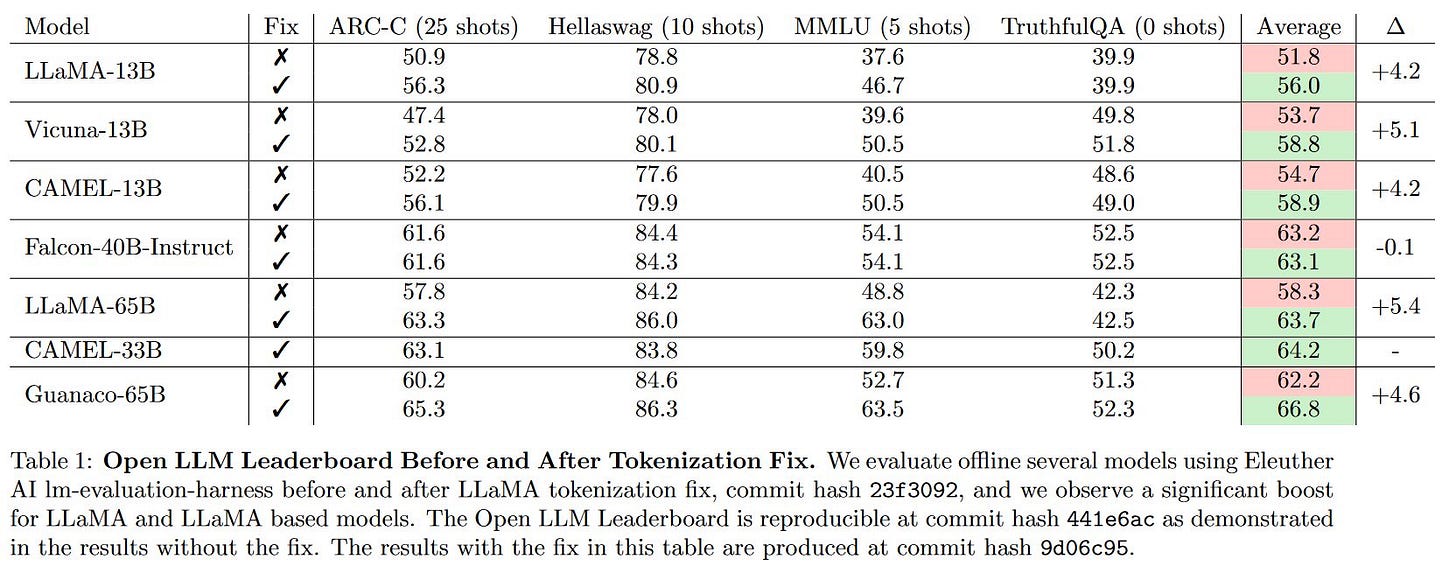

Tokenization, a critical step in natural language processing, can have a significant impact on the performance of language models. This was evident in the case of the Llama model, which initially achieved impressive results on various benchmarks, including MMLU. However, when independent hackers attempted to replicate Llama's success, they were unable to achieve the same level of performance. As a result, Falcon models took the spotlight on the OpenLLM leaderboard, dethroning Llama. However, by carefully investigating and addressing a bug in the tokenization process, Yao Fu and colleagues were able to restore Llama's performance to match the results described in the original paper. The bug in the tokenization process had caused a decrease in performance of approximately 5% across different tasks, highlighting the critical importance of tokenization. In this article, we will explore the significance of selecting the right tokenizer and its impact on achieving optimal performance in language models.

Tokens and Embeddings

In our previous discussion, we explored how to design datasets for training Large Language Models. However, neural networks don't understand text the way we humans do, so we first split text into smaller units called tokens. Tokens are the building blocks these models work with. Each token is mapped to a list of floating point numbers called an embedding. Embeddings are initialized randomly and then learned during training, and they help the model capture the meaning and context of the tokens.

The model consumes these token embeddings and then predicts a probability for every candidate next token. It's like a machine that takes in the token embeddings and tells us the likelihood of each possible next token.
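To make the pipeline concrete, here is a minimal sketch of tokens, embeddings, and next-token probabilities. The five-word vocabulary, the embedding width, and the averaging "model" are all assumptions made for illustration; a real language model replaces `next_token_probs` with a trained Transformer.

```python
import math
import random

random.seed(0)

vocab = ["<pad>", "the", "cat", "sat", "mat"]  # toy vocabulary (assumption)
dim = 4                                         # embedding width (assumption)

# One vector per token. Values start random and would be updated by training.
embeddings = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in vocab]

def softmax(scores):
    """Turn arbitrary scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def next_token_probs(token_ids):
    """Stand-in for a model: average the context embeddings, then score every token."""
    context = [
        sum(embeddings[t][d] for t in token_ids) / len(token_ids)
        for d in range(dim)
    ]
    logits = [sum(c * e for c, e in zip(context, emb)) for emb in embeddings]
    return softmax(logits)

probs = next_token_probs([vocab.index("the"), vocab.index("cat")])
for token, p in zip(vocab, probs):
    print(f"{token:>6}: {p:.3f}")
```

The output is one probability per vocabulary entry, which is why the vocabulary size directly sets the size of the model's output layer.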

In this article, we're going to dive deeper into the process of converting words into tokens. It's a crucial step in training Large Language Models, and understanding it better will help us make better use of these powerful models.

Vocabulary Size

How many tokens should be in our vocabulary? That's an important question when it comes to Large Language Models. Tokens are the building blocks that these models use for computation. The set of unique tokens a model can read and generate is its vocabulary. But figuring out the right vocabulary size can be tricky.

If we consider each byte as a separate token, our vocabulary would have 256 tokens. If we consider each Unicode character as a separate token, the vocabulary size jumps to around 130,000. That's a lot! And if we treat every unique word, or worse every unique sentence, as a separate token, the vocabulary grows far beyond that, because new words and sentences keep appearing as the dataset grows. Such a large vocabulary would demand a significant amount of memory for the embedding and output layers and greatly increase the cost of training the model.

Then we might think that using bytes as tokens would solve the problem, but it comes with its own challenges. It would mean the model needs to use a lot more tokens just to understand a single sentence. This would limit the model's ability to reason over multiple sentences. So, striking the right balance between vocabulary size and the model's context length (the number of tokens it can work with) is crucial.
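The trade-off can be sketched by counting how many tokens the same sentence costs at different granularities. The example sentence and the 2,048-token context length are assumptions chosen for illustration.

```python
sentence = "Tokenization shapes what a language model can see."

as_bytes = list(sentence.encode("utf-8"))  # byte-level tokens, vocabulary of 256
as_chars = list(sentence)                   # character-level tokens
as_words = sentence.split()                 # whitespace-separated words

def sentences_per_context(tokens_per_sentence, context_length=2048):
    """How many sentences of this length fit in one context window."""
    return context_length // tokens_per_sentence

for name, tokens in [("bytes", as_bytes), ("chars", as_chars), ("words", as_words)]:
    print(name, len(tokens), sentences_per_context(len(tokens)))
```

A small vocabulary makes each sentence expensive, so fewer sentences fit in the context. Subword tokenizers sit between the byte and word extremes.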

In the past, models used a vocabulary made up of a list of words. If a model encountered a word that wasn't in its vocabulary, it would replace it with a special <UNK> token. But this had limitations, especially when it came to domain-specific tasks where the model needed to understand and generate domain-specific words. To overcome this, researchers came up with smart methods to combine different characters together to create a vocabulary without losing any information. To reduce the number of character-level tokens (since there are so many of them), some characters are added directly to the vocabulary, while others are broken down into smaller parts called UTF-8 bytes, and each byte gets its own token.
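The byte fallback idea can be sketched as follows. The function names and the tiny character vocabulary are hypothetical; the `<0xNN>` spelling for byte tokens follows the convention SentencePiece uses, but this is an illustration rather than any library's implementation.

```python
def encode_with_byte_fallback(text, char_vocab):
    """Keep known characters as tokens; split unknown ones into UTF-8 byte tokens."""
    tokens = []
    for ch in text:
        if ch in char_vocab:
            tokens.append(ch)
        else:
            tokens.extend(f"<0x{b:02X}>" for b in ch.encode("utf-8"))
    return tokens

def decode(tokens):
    """Invert the encoding, so no information is lost and no <UNK> is needed."""
    out = bytearray()
    for tok in tokens:
        if len(tok) == 6 and tok.startswith("<0x") and tok.endswith(">"):
            out.append(int(tok[3:5], 16))
        else:
            out.extend(tok.encode("utf-8"))
    return out.decode("utf-8")

char_vocab = set("abc ")            # toy vocabulary of directly supported characters
tokens = encode_with_byte_fallback("a b é", char_vocab)
print(tokens)
print(decode(tokens))
```

Because every character has a UTF-8 encoding, the base vocabulary only needs the 256 byte tokens plus whichever characters are frequent enough to earn their own entry.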

Having a vocabulary size divisible by 64 or 128 can make training more efficient: nanoGPT reported a speedup of roughly 25% after padding its vocabulary from 50,257 to 50,304 tokens. So, when designing a vocabulary, it's a good idea to consider GPU efficiency and choose a size that works well with the hardware. Creating an optimal vocabulary for a language model requires careful thought and a balance between performance and efficiency. In the upcoming sections, we will explore different approaches and factors to consider when designing a vocabulary.
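Rounding a vocabulary size up to a hardware-friendly multiple is a one-line calculation. The helper name below is hypothetical; the extra entries are simply unused padding rows in the embedding matrix.

```python
def pad_vocab_size(vocab_size, multiple=128):
    """Round vocab_size up to the nearest multiple (padding entries are never used)."""
    return ((vocab_size + multiple - 1) // multiple) * multiple

print(pad_vocab_size(50257, 64))    # GPT-2 vocabulary padded to a multiple of 64
print(pad_vocab_size(50257, 128))   # same vocabulary padded to a multiple of 128
print(pad_vocab_size(32000, 128))   # already aligned, so unchanged
```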

Tokenizer training strategies

There are several strategies used for training tokenizers to generate subword tokens. The main algorithms are Unigram, Byte-Pair Encoding (BPE), and WordPiece, and SentencePiece is a widely used tool that applies them directly to raw text.

Let's take a closer look at each of them:

SentencePiece

If your text doesn't have spaces separating words (such as Chinese, Japanese, or Thai scripts), the SentencePiece tokenizer is a common choice. It treats the text as a raw stream of characters, with whitespace handled as just another symbol, and then applies either Unigram or BPE tokenization to generate the vocabulary. Models like T5 (vocabulary size = 32,000), PaLM (256,000), NLLB (256,000), replit-code-v1-3b (32,768) and IndicBART (64,000) use the SentencePiece tokenizer.

Unigram

The Unigram tokenizer starts with a large base vocabulary made of pre-tokenized words and common subwords from the dataset. It uses a log-likelihood loss to measure the impact of removing each token from the vocabulary, and it iteratively removes the tokens with the least impact on the overall loss until the desired vocabulary size is reached. The Unigram tokenizer is commonly used together with SentencePiece and is rarely used on its own. However, BloombergGPT (131,072) uses a Unigram tokenizer without SentencePiece.
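The pruning criterion can be sketched on a toy example. This is a simplification under stated assumptions: the token probabilities are made up, only the single best segmentation of each word is scored, and probabilities are not re-estimated after a removal (real Unigram training re-estimates them with EM). All function names are hypothetical.

```python
import math

def best_segmentation(word, log_probs):
    """Viterbi search for the highest-scoring split of `word` into known tokens."""
    n = len(word)
    best = [(-math.inf, None)] * (n + 1)  # (score, start index of the last token)
    best[0] = (0.0, None)
    for end in range(1, n + 1):
        for start in range(end):
            piece = word[start:end]
            if piece in log_probs and best[start][0] > -math.inf:
                score = best[start][0] + log_probs[piece]
                if score > best[end][0]:
                    best[end] = (score, start)
    if best[n][0] == -math.inf:
        return None, -math.inf
    pieces, end = [], n
    while end > 0:
        start = best[end][1]
        pieces.append(word[start:end])
        end = start
    return pieces[::-1], best[n][0]

def corpus_loss(word_counts, log_probs):
    """Negative log-likelihood of the corpus under the best segmentations."""
    return -sum(c * best_segmentation(w, log_probs)[1] for w, c in word_counts.items())

def removal_cost(token, word_counts, log_probs):
    """How much the loss grows if `token` is dropped from the vocabulary."""
    reduced = {t: lp for t, lp in log_probs.items() if t != token}
    return corpus_loss(word_counts, reduced) - corpus_loss(word_counts, log_probs)

word_counts = {"hug": 10, "hugs": 5, "pug": 3}             # toy corpus
probs = {"h": 0.04, "u": 0.04, "g": 0.04, "s": 0.04, "p": 0.04,
         "hug": 0.4, "ug": 0.2, "hu": 0.1, "gs": 0.1}      # made-up probabilities
log_probs = {t: math.log(p) for t, p in probs.items()}

print(best_segmentation("hugs", log_probs)[0])
for token in ["hug", "ug", "hu", "gs"]:
    print(token, round(removal_cost(token, word_counts, log_probs), 3))
```

Tokens whose removal cost is close to zero are the first candidates for pruning, while single characters are kept so that every word stays representable.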

Byte-Pair Encoding (BPE)

A BPE tokenizer employs a pre-tokenizer like spaCy or Moses to divide the text into words. It creates a base vocabulary that only includes characters present in the training data. It then repeatedly merges the most frequent pair of adjacent symbols into a new token and adds it to the vocabulary until the desired vocabulary size is reached.
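A minimal sketch of BPE training on an already pre-tokenized corpus might look like this. The word counts are a toy example and the function name is hypothetical; production tokenizers add details such as end-of-word markers and deterministic tie-breaking.

```python
from collections import Counter

def train_bpe(word_counts, num_merges):
    """Learn `num_merges` merge rules from a {word: frequency} dictionary."""
    # Each word starts as a tuple of single characters.
    corpus = {tuple(word): count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pair_counts = Counter()
        for symbols, count in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += count
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Rewrite the corpus with the chosen pair fused into one symbol.
        new_corpus = {}
        for symbols, count in corpus.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            key = tuple(merged)
            new_corpus[key] = new_corpus.get(key, 0) + count
        corpus = new_corpus
    return merges, corpus

word_counts = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
merges, corpus = train_bpe(word_counts, num_merges=3)
print(merges)
print(corpus)
```

The final vocabulary is the base characters plus one new token per merge, so the number of merges directly controls the vocabulary size.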

Byte-level BPE

Considering the large number of Unicode characters (over 130,000), Byte-level BPE treats each of the 256 possible bytes as a token in its base vocabulary and uses merge rules to construct an extended vocabulary. Models such as GPT-2 (50,257), GPT-3 (50,257), OPT (50,257), Llama (32,000, via SentencePiece BPE with byte fallback), Bloom (250,880), GPT-NeoX-20B (50,257), Pythia (50,257), MPT (50,257) and StarCoder (49,152) use a Byte-level BPE tokenizer.

WordPiece

WordPiece tokenizer is similar to BPE. It also employs pre-tokenizers to divide the text into words and creates a base vocabulary of characters. However, with WordPiece, the merge rules evaluate the likelihood of resulting tokens rather than just their frequency. BERT (30,000) utilizes WordPiece tokenizer.
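The difference between the two merge criteria can be sketched on a toy corpus. The score used here, pair frequency divided by the product of the two symbol frequencies, is the commonly described WordPiece criterion; the function name is hypothetical and the "##" continuation prefix used by BERT is left out for brevity.

```python
from collections import Counter

def pair_statistics(word_counts):
    """Return {pair: (frequency, wordpiece_score)} over a {word: frequency} corpus."""
    symbol_freq, pair_freq = Counter(), Counter()
    for word, count in word_counts.items():
        symbols = list(word)
        for s in symbols:
            symbol_freq[s] += count
        for pair in zip(symbols, symbols[1:]):
            pair_freq[pair] += count
    return {
        pair: (freq, freq / (symbol_freq[pair[0]] * symbol_freq[pair[1]]))
        for pair, freq in pair_freq.items()
    }

word_counts = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}
stats = pair_statistics(word_counts)

bpe_choice = max(stats, key=lambda p: stats[p][0])        # most frequent pair
wordpiece_choice = max(stats, key=lambda p: stats[p][1])  # highest likelihood gain

print("BPE merges first:", bpe_choice)
print("WordPiece merges first:", wordpiece_choice)
```

On this corpus BPE picks the pair that simply occurs most often, while the WordPiece score favours a rarer pair whose parts seldom appear apart.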

These different tokenizer training strategies offer flexibility and adaptability in handling various types of text data. Choosing the most suitable tokenizer depends on the specific requirements and characteristics of the language or script being processed.

Low resource languages

The number of tokens required to represent a sentence can vary significantly between languages. For example, the sentence "Why AI will save the world" is converted into only 6 tokens by tiktoken, the tokenizer library used by GPT models. However, its Hindi translation, "एआई दुनिया को क्यों बचाएगा", requires 41 tokens [1]. That's around 7 times more tokens! This ratio is even worse for other languages like Kannada or Burmese. Having more tokens means a smaller context available for reasoning, increased computational cost, and additional latency when generating similar text.
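Part of this gap is visible before any tokenizer runs. A byte-level tokenizer starts from UTF-8 bytes, and Devanagari characters take several bytes each, so the byte count is an upper bound on the number of tokens such a tokenizer can produce. The sketch below only inspects the UTF-8 encoding with a hypothetical helper; it does not call tiktoken and does not reproduce its token counts.

```python
english = "Why AI will save the world"
hindi = "एआई दुनिया को क्यों बचाएगा"

def utf8_profile(text):
    """Characters (code points) and UTF-8 bytes in a piece of text."""
    return {"characters": len(text), "bytes": len(text.encode("utf-8"))}

for name, text in [("English", english), ("Hindi", hindi)]:
    profile = utf8_profile(text)
    ratio = profile["bytes"] / profile["characters"]
    print(f"{name}: {profile} ({ratio:.2f} bytes per character)")
```

A vocabulary trained mostly on English learns few merges for these byte sequences, so a sentence of similar length ends up as many more tokens.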

Histograms of token counts for the tiktoken tokenizer (used by GPT models) and the Bloom tokenizer across different languages make this gap visible [2]. A tall and narrow curve on the histogram indicates that fewer tokens are required to tokenize the language, while a short and wide curve suggests a larger number of tokens needed for tokenization.

The histograms show that GPT models consume a significantly higher number of tokens for non-English languages, whereas with Bloom, the number of tokens is more consistent across languages.

When training language models for different use cases, it is essential to consider the languages that users prefer to interact in and adjust the tokenizer accordingly. Here are some strategies to tackle low-resource languages:

Sampling

During tokenizer training, we can use a subset of the training data that reflects the language distribution in the overall dataset. However, if we care about extremely low-resource languages, sampling in proportion to the data still over-represents higher-resource languages. In such cases, temperature sampling can be used to downsample high-resource languages and upsample low-resource languages: each language is sampled with probability proportional to its share of the data raised to the power 1/T. NLLB and IndicBART use temperature=5 for sampling datasets.
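Temperature sampling can be sketched in a few lines. The language sizes below are made-up numbers for illustration, and the function name is hypothetical.

```python
def temperature_sampling_probs(sizes, temperature=5.0):
    """Sampling probability per language: proportional to share ** (1 / temperature)."""
    total = sum(sizes.values())
    scaled = {lang: (n / total) ** (1.0 / temperature) for lang, n in sizes.items()}
    norm = sum(scaled.values())
    return {lang: s / norm for lang, s in scaled.items()}

sizes = {"en": 1_000_000, "hi": 50_000, "kn": 5_000}  # hypothetical sentence counts

proportional = temperature_sampling_probs(sizes, temperature=1.0)
smoothed = temperature_sampling_probs(sizes, temperature=5.0)

for lang in sizes:
    print(f"{lang}: proportional={proportional[lang]:.3f}  T=5: {smoothed[lang]:.3f}")
```

With temperature 1 the sampler follows the raw data distribution. Higher temperatures flatten it, giving low-resource languages a larger share of the tokenizer's training sample.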

Script selection

If the languages of interest belong to a single language family and share vocabulary across scripts, mapping all the languages to a dominant script can facilitate transfer learning. For example, IndicBART maps eleven Indic languages to the Devanagari script.
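One way to sketch script mapping relies on the fact that the Unicode blocks for the major Indic scripts are laid out in parallel, so a character can often be moved to Devanagari by shifting its code point. This is an approximation offered for illustration: the function name is hypothetical, some letters have no exact counterpart, and the source does not say that IndicBART's own pipeline works this way.

```python
DEVANAGARI_START = 0x0900
BLOCK_SIZE = 0x80

# Start of the Unicode block for a few Indic scripts.
SCRIPT_STARTS = {
    "bengali": 0x0980,
    "gujarati": 0x0A80,
    "tamil": 0x0B80,
    "kannada": 0x0C80,
}

def to_devanagari(text, script):
    """Shift characters from the given script's block into the Devanagari block."""
    start = SCRIPT_STARTS[script]
    out = []
    for ch in text:
        cp = ord(ch)
        if start <= cp < start + BLOCK_SIZE:
            out.append(chr(cp - start + DEVANAGARI_START))
        else:
            out.append(ch)  # spaces, digits, Latin text stay as they are
    return "".join(out)

print(to_devanagari("\u0995", "bengali"))  # Bengali letter ka
print(to_devanagari("\u0B95", "tamil"))    # Tamil letter ka
```

After such a mapping, cognate words from different languages share the same characters, so the tokenizer can learn shared subwords for them.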

Vocabulary Size

The size of the vocabulary plays an important role in the tokenization process. A larger vocabulary reduces the risk of over-segmenting sentences into many small pieces, but it also increases the model's size, because the embedding and output layers grow with the vocabulary. Finding the right balance between vocabulary capacity and model size requires experimentation.

Validation of multi-lingual tokenizer

To assess the effectiveness of a trained multi-lingual tokenizer, we can compare its fertility (the average number of subwords created per word, measured over a dataset) with that of the respective mono-lingual tokenizers. If the multi-lingual tokenizer generates significantly more subwords for a specific language than a tokenizer trained only on that language, it is dividing words more aggressively. It is important to check that a tokenizer's fertility stays within the desired range. Bloom targeted less than a 10% fertility degradation.
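Fertility and its degradation can be computed with a small helper. The two tokenizers below are deliberately crude stand-ins (assumptions for illustration), and words are counted by splitting on whitespace, which would need a proper word segmenter for languages written without spaces.

```python
def fertility(texts, tokenize):
    """Average number of tokens produced per whitespace-separated word."""
    words = sum(len(t.split()) for t in texts)
    tokens = sum(len(tokenize(t)) for t in texts)
    return tokens / words

def fertility_degradation(multilingual, monolingual):
    """Relative increase in fertility of the multilingual tokenizer."""
    return (multilingual - monolingual) / monolingual

# Stand-in tokenizers: one token per word, and word pieces of at most 4 characters.
def mono_tokenize(text):
    return text.split()

def multi_tokenize(text):
    return [w[i:i + 4] for w in text.split() for i in range(0, len(w), 4)]

texts = ["tokenizers split words", "some words stay whole"]
mono = fertility(texts, mono_tokenize)
multi = fertility(texts, multi_tokenize)
print(mono, multi, f"{fertility_degradation(multi, mono):.0%}")
```

In practice the same held-out text would be run through the real multi-lingual and mono-lingual tokenizers, and the degradation compared against a budget such as Bloom's 10% target.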

By considering these strategies, we can better handle the challenges of tokenizing low-resource languages and achieve more consistent tokenization results across different languages.

Limitations of Tokenizers

Tokenization is an important step in training large language models, but it also comes with some limitations that can affect the model's performance. Let's take a look at a few of these limitations:

Trailing whitespace errors

Accidentally adding whitespace at the end of the prompt can produce a different token sequence than the same prompt without it. Many vocabularies attach the space to the start of the following word, so a trailing space becomes a separate token that the model has rarely seen in that position during training, which can lead to unexpected outputs.
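The effect can be reproduced with a toy tokenizer. The vocabulary and the greedy longest-match rule below are assumptions chosen to keep the sketch short; real BPE tokenizers apply learned merge rules instead, but they show the same behaviour with leading-space tokens.

```python
VOCAB = {"Hello", " world", " there", " ", "!"}  # toy vocabulary with leading-space words

def greedy_tokenize(text, vocab):
    """Repeatedly take the longest vocabulary entry that matches at the current position."""
    tokens, i = [], 0
    while i < len(text):
        for j in range(len(text), i, -1):
            if text[i:j] in vocab:
                tokens.append(text[i:j])
                i = j
                break
        else:
            tokens.append(text[i])  # unknown character kept as its own token
            i += 1
    return tokens

print(greedy_tokenize("Hello world", VOCAB))
print(greedy_tokenize("Hello world ", VOCAB))
```

Without the trailing space, the model can continue with a token like " there". With it, the prompt already ends in a bare space token, so the natural continuation no longer lines up with what the model saw in training.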

Character manipulation tasks

Large language models operate at the token level, which means they struggle with tasks that require manipulating individual characters when those characters are not divided into separate tokens.

Weird tokens

Certain tokens like “SolidGoldMagikarp” or “TheNitromeFan” that are more common during tokenizer training than in model training can lead to strange behaviors like evasion, insults, or hallucinations from the model. This increases the risk of "jail-breaking" the models.

Additional modalities

If we want to incorporate other modalities like video or audio, tokenization becomes more complex. It involves clustering or using discrete auto-encoders to compress the input, which can result in lossy compression and might limit the model's performance.

As we look to the future, exciting advancements in model architecture, like MegaByte, offer the promise of training large language models directly on bytes, without the need to worry about tokenizer training. Imagine models that can handle sequences of a million bytes or more effortlessly. However, until these advances become a reality, let's focus on training our tokenizers and stay prepared for the possibilities that lie ahead.

Cheers,

Sachin

s16r.com

[1] You can count the number of tokens generated by tiktoken on the OpenAI platform.

[2] You can also generate similar histograms using the HuggingFace space.